I attended Lund University’s January 2022 Learning Laboratory in Human Factors and System Safety, and it was awesome. It was a very inspiring and well put together week.

I’ve been interested in incident and accident investigations for most of my professional life. I spent a few years in aviation, and I spent quite some time reading and talking about (aviation) incident reports. When I moved into IT, I mostly put this interest on the backburner for a while.

Over the past few years, however, triggered by talks and articles by John Allspaw, J Paul Reed, Richard Cook, David Woods and many others, I rekindled my fascination with human factors, system safety, resilience engineering, and related subjects. I found my way to a lot of interesting books, articles and talks.

Through Allspaw & Reed, I already knew of Lund University’s Faculty of Engineering and specifically their MSc programme in Human Factors & System Safety. While I wasn’t looking for, or ready for, the MSc programme, I found that they also offer Learning Laboratories: weeklong sessions that are part of the MSc programme but can also be attended by anyone interested in the topic. This sounded like a great way to immerse myself in the topic a little deeper and meet some like-minded folks. And so I signed up for the Jan 2022 edition: “Critical Thinking in Safety”.

Because of the pandemic, Lund University offered the Jan 2022 edition of the Learning Lab as a hybrid setup. Some folks chose to attend physically, others (like myself) attended over Zoom.

Day 1: introductions and looking into safety

The first day of the Learning Lab was all about getting to know each other, setting some expectations, arranging some logistics, and getting a good introduction into the subject matter.

Then we dug in. Using a case study from the Danish Maritime Accident Investigation Board as a backdrop, we started to discover and discuss different views of safety and different ways of looking at an incident.

At the end of the day we broke into groups to further discuss and highlight the characteristics of and differences between the “orthodox” and “progressive” views on safety. Highly inspiring!

Day 2: foundations of safety science / thinking and human “error”

The day started with a recap of the previous day, and sharing the work produced by the breakout groups.

After that, we learned about the history of Heinrich, Reason and Rasmussen, three highly influential figures in the field of safety thinking, each with their own ideas and theories, shaped in part by their place in history.

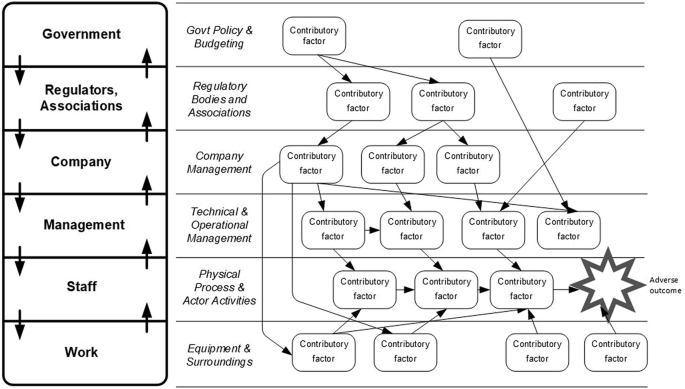

The group was then introduced to Accimaps, a “multi-layered causal diagram that arranges the various causes of an accident in terms of their causal remoteness from the accident” developed by Rasmussen.

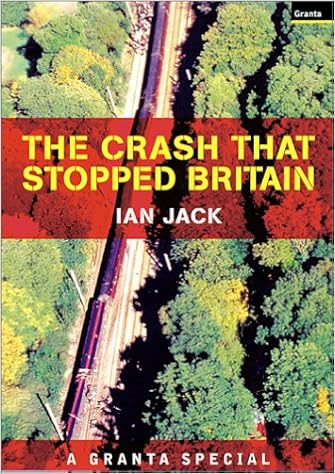

Again we broke into groups to start producing an Accimap for the Hatfield rail crash, using the book “The Train Crash that Stopped Britain” as source material.

Day 3: models of safety

Again, the day started with a recap of the previous day and some discussions about Accimaps and their usage.

Then we moved into “four models of safety”. We talked about the definition of “risk” in these models, and why accidents occur:

- risk as energy to be contained

This model is all about uncontained and harmful energy: explosions, crashes, spills. Defense against this energy consists of barriers (Swiss Cheese Model, defined by James Reason). Risk can be calculated and reliability (of barriers) can be measured.

- risk as a structural property of complexity

Enter Charles Perrow’s Normal Accident Theory. We look at systems that are loosely or tightly coupled, linear or complex.

- risk as a gradual acceptance

This model is defined by the idea that accidents have history, and are the result of a build-up of latent errors and events. We discussed the highly influential books by Diane Vaughan (normalization of deviance) and Scott Snook (accidents can happen when every actor behaves as expected).

- risk as a control problem

This model looks at a cybernetic approach to control risk and to create resilient systems.

And again, we broke into groups to take another look at the Hatfield rail crash, through the lens of these four models of safety. The group I was in started producing a second Accimap, but using Normal Accident Theory as a base.

Day 4: human factors

After reviewing the previous day, we moved into a definition of Human Factors. We looked at the human factor, characteristics of humans, external and internal factors that shape human performance, sociotechnical interactions and the ironies of automation.

We then moved into a discussion on sociotechnical systems, systems theory and complexity, and the 1st and 2nd cognitive revolutions.

Next up was the use of language in safety science, such as the word “system”, and the use of counterfactuals (should’ve, could’ve, would’ve).

Then we were introduced to the concepts of local rationality and goal conflicts through a presentation about an investigation into a health care incident.

At the end of the day, we broke into groups again to finish our Accimaps and create a short presentation.

Day 5: wrapping up

The last day of the Learning Lab! We were graced by a visit from Jean-Christophe Le Coze. He shared a presentation about his thinking on safety science research and how that has been affected by powerful transformations (such as globalisation). After a very fruitful discussion, it was then time to wrap up the Learning Lab.

Recap

The Learning Lab week was intense and thoroughly confusing (in a good way!). The week produced some answers, but mostly a lot of new questions. Also, I added a metric ton of interesting papers, books and talks to my backlog.

I appreciate Lund University for providing the backdrop for, and stimulating, a lot of interaction and many insightful and pleasant discussions with experienced professionals and practitioners from various domains and industries. It is great to see how this particular topic, and the way it is treated at Lund, is producing a truly welcoming and inspiring community.

I highly recommend attending a Learning Lab in the future!

What’s next?

Good question. I primarily attended this Learning Lab to, well, learn, mostly out of personal interest in the subject. But besides the many questions about the topic itself, this week also produced a few questions of a more personal nature. I’ve always been interested in human factors and system safety, and this interest is slowly but surely turning into a passion. I want to do more with this, but I’m not sure what or how yet. There’s also the matter of the full HFSS MSc programme… 😉

Alas, there’s always more thinking to do!
